Explainable AI: The Path To Human-Friendly Artificial Intelligence

Artificial Intelligence (AI) has made significant strides in recent years, revolutionizing many industries and aspects of our lives. However, one of the biggest challenges with AI is the lack of transparency in how it reaches its decisions. This is where Explainable AI comes into play. In this article, we explore Explainable AI and how it bridges the gap between AI and human understanding, starting with what Explainable AI actually is.

Explainable AI - The Future of Machine Learning

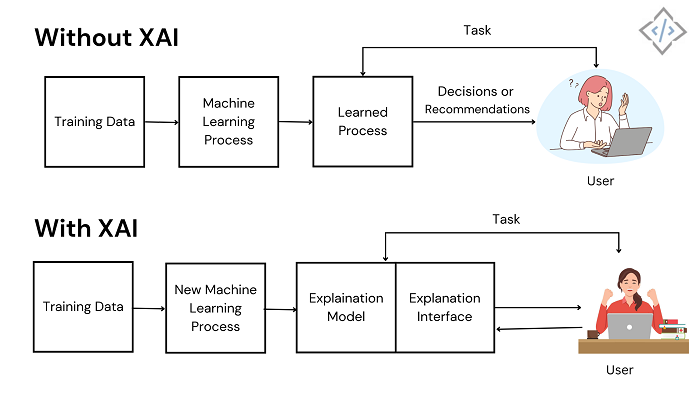

Explainable AI (XAI) is a field of artificial intelligence (AI) and machine learning that focuses on making AI models more understandable and transparent for humans. This is important because many AI models are complex and opaque, which can make it difficult for humans to understand and trust their decisions.

XAI helps bridge the gap between AI and human understanding by explaining AI decisions, identifying potential biases and errors in AI models, and supporting human-AI collaboration. This matters for several reasons, including:

- Building Trust

When people understand how AI systems make decisions, they are more likely to trust those decisions. This is especially important for high-stakes applications, such as those involving healthcare or finance.

- Debugging and Improving Models

XAI techniques can help developers identify and fix problems with their AI models. This can help to improve the accuracy and reliability of AI systems.

- Ensuring Fairness and Non-Discrimination

XAI can help to identify and mitigate biases in AI systems. This is important to ensure that AI systems are fair and do not discriminate against certain groups of people.


How Explainable AI (XAI) Works

Explainable AI (XAI) tackles the challenge of making complex AI models understandable to humans. It typically relies on three kinds of techniques:

- Feature importance: identify the features or variables that contributed most to the model's output, using techniques such as permutation importance or SHAP (SHapley Additive exPlanations) values.

- Local explanations: use techniques such as LIME (Local Interpretable Model-agnostic Explanations) to build simplified, interpretable models around individual predictions.

- Global explanations: offer insights into the model's overall behavior across the entire dataset through visualizations, rule extraction, or model simplification.
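Permutation importance, one of the feature-importance techniques mentioned above, can be sketched without any libraries: shuffle one feature column at a time and measure how much the model's error grows. The model `toy_model` and the synthetic data below are hypothetical, and mean squared error is assumed as the score; scikit-learn's `sklearn.inspection.permutation_importance` provides a production implementation.

```python
import random

def toy_model(row):
    # Hypothetical "black-box" model: depends strongly on feature 0,
    # weakly on feature 1, and ignores feature 2 entirely.
    return 3.0 * row[0] + 0.5 * row[1]

def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Average increase in MSE when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mean_squared_error(y, [model(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row, replacing feature j with its shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            score = mean_squared_error(y, [model(row) for row in X_perm])
            increases.append(score - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances

rng = random.Random(42)
X = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(200)]
y = [toy_model(row) for row in X]  # noise-free targets for clarity

for name, score in zip(["x0", "x1", "x2"], permutation_importance(toy_model, X, y)):
    print(f"{name}: {score:.3f}")
```

Because `toy_model` never reads feature 2, shuffling that column leaves every prediction unchanged and its importance is exactly zero, while feature 0 scores highest.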

1. Input Data Collection

The XAI process begins with collecting input data, which can include structured and unstructured data such as text, images, sensor readings, or numerical values. This data serves as the input to the AI model.

2. Model Training

Next, the AI model is trained on the collected data. The model can be built with various machine learning techniques, such as neural networks, decision trees, support vector machines, or ensemble methods. During training, the model learns patterns and relationships within the data to make predictions or decisions.

3. Model Evaluation

After training, the model is evaluated on validation data using performance metrics such as accuracy, precision, recall, and F1 score. This step helps ensure that the model generalizes well to new, unseen data.
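The metrics named in this step follow directly from counts of true positives, false positives, and false negatives. Here is a minimal sketch for a binary classifier; the labels and predictions are hypothetical.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    # Guard against division by zero when a class is never predicted/present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical validation labels and model predictions.
y_val = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]
print(classification_metrics(y_val, y_pred))
```

Here the model finds three of the four positives (recall 0.75) but two of its five positive predictions are wrong (precision 0.6), a gap that accuracy alone (0.625) would hide.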

4. Explanation Generation

In explainable AI, the goal is to provide insights into how the AI model arrived at its predictions or decisions. This involves generating explanations that humans can understand, using the feature-importance, local, and global techniques outlined earlier.

5. Explanation Presentation

Once the explanations are generated, they need to be presented clearly to end users. This could involve visualizations, natural language explanations, or interactive interfaces that allow users to explore the model's behavior.
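As a small illustration of natural language explanations, the sketch below turns signed per-feature attribution scores (for example, SHAP-style values) into a short sentence. The function name, feature names, and scores are hypothetical.

```python
def explain_in_words(prediction, attributions, top_k=2):
    """Turn per-feature attribution scores into a short sentence.

    `attributions` maps feature names to signed contributions; positive
    values push the prediction up, negative values push it down.
    """
    # Rank features by the size of their effect, regardless of direction.
    ranked = sorted(attributions.items(), key=lambda item: abs(item[1]), reverse=True)
    parts = []
    for name, value in ranked[:top_k]:
        direction = "raised" if value > 0 else "lowered"
        parts.append(f"{name} {direction} it by {abs(value):.2f}")
    return f"Predicted score {prediction:.2f}: " + "; ".join(parts) + "."

# Hypothetical credit-scoring attributions.
print(explain_in_words(0.72, {"income": 0.25, "debt_ratio": -0.18, "age": 0.03}))
# Predicted score 0.72: income raised it by 0.25; debt_ratio lowered it by 0.18.
```

Limiting the output to the top few features is a deliberate trade-off: the sentence stays readable for end users, while the full attribution table can still be offered in an interactive view.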

6. User Feedback and Iteration

Feedback from users is crucial for improving the effectiveness of XAI systems. Users may provide insights into the usefulness and comprehensibility of the explanations, which can inform future iterations of the AI model or the explanation generation process.

7. Monitoring and Maintenance

XAI systems should be continuously monitored to ensure that explanations remain accurate and up-to-date as data distributions or model behavior change over time. Regular maintenance and updates may be necessary to address drift or other issues.
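The article does not prescribe a particular drift check. One commonly used option, assumed here, is the Population Stability Index (PSI), which compares a feature's binned distribution at training time with its distribution in recent data. A minimal sketch with synthetic data:

```python
import math
import random

def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a recent sample of one feature.

    Bin edges come from the baseline's range; a small epsilon avoids log(0).
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp values outside the baseline range into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [c / len(values) for c in counts]

    eps = 1e-6
    psi = 0.0
    for e, a in zip(proportions(expected), proportions(actual)):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi

rng = random.Random(0)
baseline = [rng.gauss(0.0, 1.0) for _ in range(5000)]  # training-time values
stable = [rng.gauss(0.0, 1.0) for _ in range(5000)]    # recent data, same distribution
drifted = [rng.gauss(1.0, 1.0) for _ in range(5000)]   # recent data, mean shifted by 1
print(f"stable:  {population_stability_index(baseline, stable):.3f}")
print(f"drifted: {population_stability_index(baseline, drifted):.3f}")
```

A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, but thresholds should be tuned per application. When drift is detected, explanations computed on the old data may also need to be regenerated.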

By following these steps, Explainable AI enables users to gain insights into AI model behavior, understand the reasoning behind predictions or decisions, and ultimately build trust in AI systems. This transparency and interpretability are essential for deploying AI in high-stakes domains such as healthcare, finance, and autonomous vehicles.

Explainable AI (XAI) Algorithms

Explainable AI algorithms and libraries have emerged as invaluable tools for understanding complex models. They provide reports that clarify the inner workings of these models and attempt to demystify their decision-making processes. Here are some prominent examples.

LIME (Local Interpretable Model-Agnostic Explanations)

LIME is a technique that can be used to explain any type of machine learning model, regardless of its complexity. It works by fitting a simple local model (such as a weighted linear model) that approximates the behavior of the original model around a particular input. Because this surrogate is simple, its weights can be read directly as an explanation of that prediction.
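The sketch below illustrates the LIME idea in simplified form. It assumes Gaussian perturbations in the original feature space, an exponential proximity kernel, and a weighted least-squares fit; the black-box function and all parameter values are hypothetical. The official `lime` package adds interpretable representations, feature selection, and support for text and images.

```python
import math
import random

def solve_linear_system(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [A[i][:] + [b[i]] for i in range(n)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def lime_style_explanation(black_box, x, n_samples=500, scale=0.1,
                           kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate around point x.

    Returns [intercept, slope_1, ..., slope_d]; the intercept approximates
    black_box(x) and the slopes approximate its local behaviour near x.
    """
    rng = random.Random(seed)
    d = len(x)
    A = [[0.0] * (d + 1) for _ in range(d + 1)]  # accumulates Z^T W Z
    b = [0.0] * (d + 1)                          # accumulates Z^T W y
    for _ in range(n_samples):
        offsets = [rng.gauss(0.0, scale) for _ in range(d)]
        z = [xi + oi for xi, oi in zip(x, offsets)]  # perturbed sample
        # Closer samples get larger weights.
        weight = math.exp(-sum(o * o for o in offsets) / kernel_width ** 2)
        row = [1.0] + offsets  # intercept column + centred features
        y = black_box(z)
        for i in range(d + 1):
            b[i] += weight * row[i] * y
            for j in range(d + 1):
                A[i][j] += weight * row[i] * row[j]
    return solve_linear_system(A, b)

def black_box(z):
    # Hypothetical non-linear model: quadratic in feature 0, linear in feature 1.
    return z[0] ** 2 + 3.0 * z[1]

intercept, *slopes = lime_style_explanation(black_box, [1.0, 2.0])
# The slopes should be close to 2.0 and 3.0, the true gradient at [1, 2].
print(round(intercept, 2), [round(s, 2) for s in slopes])
```

Even though the black box is non-linear, the local surrogate recovers how each feature affects the output near the chosen input, which is exactly what LIME reports as its explanation.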

SHAP (SHapley Additive exPlanations)

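SHAP is a technique that assigns a contribution score to each feature for a particular prediction, based on Shapley values from cooperative game theory. Each score indicates how much that feature pushed the prediction up or down relative to a baseline (typically the model's average prediction), and the scores add up to the difference between the prediction and that baseline. This makes it possible to explain why the model made the prediction it did.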
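For a model with only a few features, Shapley values can be computed exactly by enumerating every coalition of features. This sketch assumes that a feature "absent" from a coalition is replaced by a baseline value. It is not the `shap` library's algorithm (which relies on approximations such as KernelSHAP or model-specific methods such as TreeSHAP), and the model is hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley values for one prediction by enumerating all coalitions.

    Features outside a coalition take their baseline value. The cost grows
    as 2**n, so this only suits a handful of features.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                # Shapley weight: |S|! (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi

def model(z):
    # Hypothetical model with an interaction between features 0 and 1.
    return 2.0 * z[0] + 1.0 * z[1] + 0.5 * z[0] * z[1] - z[2]

x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print([round(p, 6) for p in phi])  # [2.5, 2.5, -3.0]
# Efficiency: the contributions sum to model(x) - model(baseline).
print(round(sum(phi), 6), model(x) - model(baseline))
```

The interaction term contributes 1.0 at this input, and the Shapley computation splits it evenly between features 0 and 1, which is why both end up at 2.5.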

DALEX (moDel Agnostic Language for Exploration and eXplanation)

DALEX is an open-source library, available for both R and Python, that provides a unified interface for explaining various types of machine learning models. It supports a variety of explanation methods, including LIME, SHAP, and partial dependence plots (PDPs).
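Partial dependence, one of the methods listed above, shows how the model's average prediction changes as a single feature is varied while all other features keep their observed values. Here is a minimal sketch (not DALEX's API), with a hypothetical model and data:

```python
def partial_dependence(model, X, feature, grid):
    """Average model output as `feature` is set to each grid value for every row."""
    curve = []
    for g in grid:
        preds = []
        for row in X:
            z = list(row)
            z[feature] = g  # force the feature to the grid value
            preds.append(model(z))
        curve.append(sum(preds) / len(preds))
    return curve

def model(z):
    # Hypothetical model: feature 0 has a non-linear (quadratic) effect.
    return z[0] ** 2 + z[1]

X = [[0.0, 1.0], [1.0, 2.0], [2.0, 3.0]]
grid = [0.0, 1.0, 2.0, 3.0]
print(partial_dependence(model, X, feature=0, grid=grid))
# [2.0, 3.0, 6.0, 11.0]
```

Plotting these values against the grid gives the PDP; the upward-curving shape reveals the quadratic effect of feature 0 that a single importance score would not show.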

Eli5 (Explain Like I'm 5)

Eli5 is a Python package that provides a simple and intuitive API for explaining machine learning models. It supports explanation methods such as feature importance (model weights) and permutation importance.

Captum

Captum is a Python toolkit for explaining the predictions of PyTorch models. It provides a variety of explanation methods, including LIME, SHAP, and gradient-based methods.

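Microsoft InterpretML

InterpretML is an open-source toolkit from Microsoft for training interpretable models and explaining the predictions of black-box models. It provides glassbox models such as the Explainable Boosting Machine (EBM), along with explanation methods including feature importance and PDPs.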

Each of these algorithms has its own strengths and weaknesses, so the best choice for a particular application will depend on the specific needs of the user. However, all of these algorithms are valuable tools for making AI models more transparent and understandable to humans.

Challenges of Explainable AI

Explainable AI (XAI) is a field of artificial intelligence that focuses on making AI models more transparent and understandable to humans. However, there are several challenges that need to be addressed in order to make XAI a reality.

Complexity of AI Models

Many AI models are complex and difficult to understand, even for experts. This makes it difficult to explain how these models make decisions.

Lack of Training Data

In some cases, there may not be enough training data available to build reliable models or to explain how they make decisions. This is a particular challenge for data-hungry models such as deep learning models, which typically need large amounts of data.

The Trade-Off Between Accuracy and Explainability

In many cases, there is a trade-off between accuracy and explainability. Models that are more accurate are often less explainable, and vice versa. This is because more accurate models tend to be more complex.

Subjectivity of Explanations

Explanations of AI models can be subjective and depend on the perspective of the person who is interpreting the model. This can make it difficult to create explanations that are both accurate and understandable.

Communication of Explanations

It can be difficult to communicate explanations of AI models to non-experts. This is because explanations often require a technical understanding of the model.

Despite these challenges, XAI is an important field of research that is essential for ensuring that AI is used in a responsible and ethical manner. As XAI continues to develop, it is likely to play an increasingly important role in our lives.

Key Differences Between Explainable AI and Interpretable AI

Both explainable AI (XAI) and interpretable AI are crucial aspects of building trustworthy and reliable AI systems. While the terms are often used interchangeably, they have subtle differences in focus and application.

Interpretability in AI delves into the inner workings of the AI model. It focuses on understanding the relationships between the model's inputs and outputs. For example, an interpretable spam filter lets us see how specific words or phrases in an email trigger a spam classification. This transparency is valuable for debugging the model, identifying potential biases, and ensuring its logic aligns with expectations.
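To make the spam example concrete, the sketch below uses multinomial naive Bayes as one example of an inherently interpretable model (an assumption; the article does not name a specific model). Each word gets a log-odds weight, and a positive weight pushes an email toward spam. The tiny training set is hypothetical.

```python
import math
from collections import Counter

# Hypothetical toy training data: (email text, is_spam).
emails = [
    ("win a free prize now", True),
    ("free money click now", True),
    ("meeting agenda for monday", False),
    ("lunch on monday with the team", False),
]

def train_word_weights(data, alpha=1.0):
    """Per-word log-odds of spam vs. ham with Laplace smoothing (naive Bayes)."""
    spam, ham = Counter(), Counter()
    for text, is_spam in data:
        (spam if is_spam else ham).update(text.split())
    vocab = set(spam) | set(ham)
    spam_total = sum(spam.values()) + alpha * len(vocab)
    ham_total = sum(ham.values()) + alpha * len(vocab)
    return {
        w: math.log((spam[w] + alpha) / spam_total) - math.log((ham[w] + alpha) / ham_total)
        for w in vocab
    }

def explain(text, weights):
    """Return each known word's contribution to the spam score, largest first."""
    contributions = [(w, weights[w]) for w in text.split() if w in weights]
    return sorted(contributions, key=lambda item: item[1], reverse=True)

weights = train_word_weights(emails)
for word, score in explain("free lunch now", weights):
    print(f"{word:>6}: {score:+.2f}")
```

Because the model's score is simply a sum of these per-word weights (plus a class prior, which is zero here since the classes are balanced), the weights themselves are the explanation: "free" and "now" push toward spam, while "lunch" pushes away from it.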

Explainability, on the other hand, focuses on explaining the decisions made by AI systems in a human-understandable way. It provides insights into why a particular input resulted in a specific output. Explainability is important for building trust with users and ensuring fairness in AI decisions.

| Feature | Interpretability | Explainability |
|---|---|---|
| Focus | Inner workings of the model | Specific decisions made by the model |
| Transparency | High transparency - allows understanding of relationships between inputs and outputs | Medium transparency - provides explanations without necessarily revealing inner workings |
| Explanation Level | Detailed analysis of internal mechanisms | High-level overview of factors influencing decisions |
| Techniques | Decision trees, rule-based systems | LIME, SHAP (SHapley Additive exPlanations) |
| Use Cases | Debugging, bias detection, understanding model behavior | Building trust, justifying decisions, offering user-friendly feedback |

Both interpretable and explainable AI play a crucial role in making AI more trustworthy and reliable. The choice between them depends on the specific needs of the application. If high transparency is paramount, interpretable models are preferred.

Key Differences Between Explainable AI and Responsible AI

With the increasing demand for AI, it is necessary to focus on building trust and ensuring responsible development. Two key concepts come into play here: Explainable AI (XAI) and Responsible AI (RAI). The two are related but distinct.

Explainable AI focuses on making the decision-making process of an AI model understandable to humans. It's like having a window into the "black box" of AI that allows users to comprehend the rationale behind the model's output.

Responsible AI is a broader concept that encompasses the ethical and societal implications of AI development and deployment. It's about using AI to benefit humanity and minimize risks.

| Feature | Explainable AI (XAI) | Responsible AI (RAI) |
|---|---|---|
| Focus | Understanding AI decisions | Ethical and responsible development of AI |
| Scope | Specific model explanations | Broader principles for AI development and deployment |
| Techniques | LIME, SHAP | Diverse approaches for each principle (e.g., fairness metrics, privacy-preserving techniques) |
| Benefits | Trust, user confidence, debugging | Mitigates risks, promotes trust, ensures ethical AI |
| Drawbacks | Less transparency (complex models), potential misinterpretation | Balancing principles can be complex |
| Model Complexity | Can be applied to complex models | Applicable to all models |

XAI is a tool within the broader framework of RAI. While XAI helps users understand AI decisions, RAI ensures the entire AI development process is ethical and responsible. Together, they pave the way for trustworthy and beneficial AI applications.

Real-World Applications of Explainable AI

Explainable AI (XAI) is important because AI models are becoming increasingly complex and are being used in more and more critical applications, such as healthcare, finance, and criminal justice. Without XAI, it can be difficult to understand how an AI model made a particular decision, which can lead to a lack of trust and confidence in the model.

1. Healthcare

In the healthcare industry, Explainable AI is invaluable for assisting doctors in diagnosing diseases and making treatment decisions. XAI can provide clear explanations for a specific diagnosis or suggested treatment option, which allows healthcare professionals to make informed choices.

2. Finance

Explainable AI is also widely used in the financial sector for credit scoring, fraud detection, and investment decisions. By understanding how AI models arrive at financial predictions, institutions can ensure fairness and transparency in their operations.

3. Autonomous Vehicles

In the development of autonomous vehicles, Explainable AI is crucial for safety. It enables vehicle operators and passengers to comprehend why a self-driving car makes certain decisions, which is essential for trust and safety.

4. Criminal Justice

XAI can be used to explain why an AI model flagged a particular suspect as being more likely to commit a crime. This can help law enforcement officers make more informed decisions about how to allocate their resources and investigate crimes.

5. Recommender Systems

XAI can be used to explain why a recommender system is suggesting a particular product or service to a user. This helps users understand the reasoning behind the recommendations and make more informed decisions about what to purchase or consume.

Conclusion

By making AI decisions transparent and understandable, XAI enhances trust, compliance, and the overall utility of artificial intelligence. In an ever-evolving world where AI plays an increasingly significant role, Explainable AI is a critical component in ensuring that technology serves us well and in a way that we can comprehend.

As the field of XAI continues to advance, we can look forward to more transparent and trustworthy AI systems that benefit society as a whole. Hire experienced AI ML developers from CodeTrade and get assured project delivery. Stay tuned with CodeTrade India and get the latest technology updates.