Ensemble Models For Flower Image Classification In Deep Learning: A New Era

Flowers have captivated human beings for centuries with their beauty and diversity. From the vibrant colors of tulips to the delicate petals of roses, the world of flowers is a rich tapestry of nature's artistry. But what if we could teach machines to appreciate and recognize this beauty as well? In the new era of deep learning, we can do just that. In this blog, we will explore how flower image classification is done with ensemble models in deep learning.

Overview of Image Classification in Deep Learning

Flower image classification is a challenging task in computer vision, due to the large variety of flower species and the complex visual features of flowers. Deep learning models have achieved state-of-the-art results on flower image classification tasks, but they can be sensitive to the choice of model architecture, hyperparameters, and training data.

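Ensemble models are a powerful technique for improving the performance of deep learning models: they combine the predictions of multiple base models into a single, usually more accurate, prediction. This works mainly because combining models reduces variance. When the base models make different mistakes, averaging their outputs cancels out part of the individual errors, and because each model may pick up different features, the combination can capture patterns that a single model misses.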
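In the averaging ensemble used in this post, the combination rule can be written out explicitly. With M base models (three here), let p_m(c | x) be the softmax probability that model m assigns to class c for an image x. The ensemble averages these probabilities and predicts the class with the highest average, an approach often called soft voting:

```latex
\bar{p}(c \mid x) = \frac{1}{M} \sum_{m=1}^{M} p_m(c \mid x),
\qquad
\hat{y}(x) = \arg\max_{c} \, \bar{p}(c \mid x)
```

This is what the Average layer in the ensemble code computes, followed by taking the arg max of its output.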

Let’s start with how to perform flower image classification using ensemble models in TensorFlow. Here, we’ll create an ensemble of three different models: a simple CNN, a DenseNet, and a model built from Squeeze-and-Excitation (SE) blocks. This ensemble approach can lead to better classification performance by leveraging the strengths of each model.

Data Preparation

Before diving into the model architectures, we need to prepare our dataset. You can use a flower dataset from Kaggle. Make sure to split the data into training and validation sets; the models below expect 224x224 RGB images and 10 classes.
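As one way to do the split, assuming the Kaggle images are arranged in one subfolder per flower class, `tf.keras.utils.image_dataset_from_directory` can load and split them in one step. This is a sketch: the folder layout, batch size, split ratio, seed, and the helper name `load_flower_datasets` are assumptions. Images come out as float32 tensors resized to 224x224 with pixel values in [0, 255], and labels are one-hot encoded to match the `categorical_crossentropy` loss used later.

```python
import tensorflow as tf

IMG_SIZE = (224, 224)  # matches input_shape=(224, 224, 3) used by all three models
BATCH_SIZE = 32        # assumption: adjust to your GPU memory


def load_flower_datasets(data_dir, validation_split=0.2, seed=42):
    """Hypothetical helper: split a folder of class subfolders into train/val sets.

    Assumes data_dir contains one subfolder per flower class, e.g.
    data_dir/rose/*.jpg, data_dir/tulip/*.jpg, ...
    """
    common = dict(
        validation_split=validation_split,
        seed=seed,                 # same seed in both calls keeps the subsets disjoint
        image_size=IMG_SIZE,       # every image is resized to 224x224
        batch_size=BATCH_SIZE,
        label_mode="categorical",  # one-hot labels for categorical_crossentropy
    )
    train_ds = tf.keras.utils.image_dataset_from_directory(
        data_dir, subset="training", **common
    )
    val_ds = tf.keras.utils.image_dataset_from_directory(
        data_dir, subset="validation", **common
    )
    return train_ds, val_ds
```

The returned datasets can be passed to `fit()` wherever the training code uses `train_generator` and `validation_generator`.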

1. Simple CNN

CNNs are a powerful tool for image classification and other computer vision tasks. They can be used to classify images into different categories, detect objects in images, and even generate new images.

A simple CNN architecture like this one is often used as a baseline and a starting point for more complex models. The convolutional layers extract features from the input image, and the pooling layers reduce the spatial size of the feature maps. The fully connected (Dense) layers at the end then classify the image into one of 10 categories.

We'll start with a basic Convolutional Neural Network (CNN) architecture. Here's a simplified code snippet for a simple CNN model:

from tensorflow.keras.models import Sequential
from tensorflow.keras import layers

simple_cnn_model = Sequential([
    layers.Conv2D(16, 3, padding='same', activation='relu', input_shape=(224, 224, 3)),
    layers.MaxPooling2D(),
    layers.Conv2D(32, 3, padding='same', activation='relu'),
    layers.MaxPooling2D(),
    layers.Conv2D(64, 3, padding='same', activation='relu'),
    layers.MaxPooling2D(),
    layers.Flatten(),
    layers.Dense(128, activation='relu'),
    # We have 10 flower classes; change this number to match your dataset.
    layers.Dense(10, activation='softmax')
])

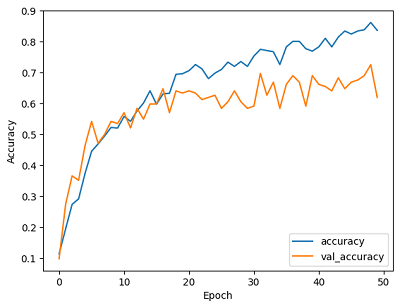

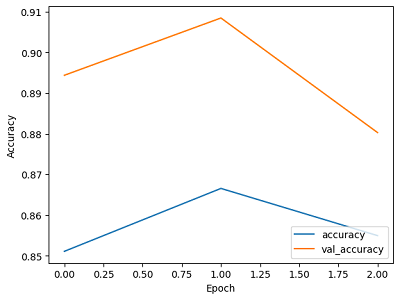

Accuracy of the trained simple CNN model.
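To see where the simple CNN's parameters come from, the sketch below rebuilds the same stack of layers and checks the shape arithmetic. Each 2x2 max-pooling step halves the 224x224 input (224 to 112 to 56 to 28), so Flatten produces 28 x 28 x 64 = 50,176 features, and the Dense(128) layer alone holds 50,176 x 128 + 128 = 6,422,656 weights, the large majority of the model's parameters. The helper name `build_simple_cnn` is only for illustration.

```python
import tensorflow as tf
from tensorflow.keras import layers


def build_simple_cnn(num_classes=10):
    """Same layer stack as the simple CNN above (illustrative helper)."""
    return tf.keras.Sequential([
        layers.Input(shape=(224, 224, 3)),
        layers.Conv2D(16, 3, padding="same", activation="relu"),
        layers.MaxPooling2D(),  # 224 -> 112
        layers.Conv2D(32, 3, padding="same", activation="relu"),
        layers.MaxPooling2D(),  # 112 -> 56
        layers.Conv2D(64, 3, padding="same", activation="relu"),
        layers.MaxPooling2D(),  # 56 -> 28
        layers.Flatten(),       # 28 * 28 * 64 = 50,176 features
        layers.Dense(128, activation="relu"),
        layers.Dense(num_classes, activation="softmax"),
    ])


model = build_simple_cnn()
dense_128 = model.layers[7]  # layers: 3x (Conv2D, MaxPooling2D), Flatten, Dense, Dense

print(tuple(dense_128.kernel.shape))  # (50176, 128)
print(dense_128.count_params())       # 6422656
print(model.count_params())           # 6447530
```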

2. DenseNet

DenseNet models can be used for a variety of image classification tasks, such as classifying animals, objects, or scenes. They are also used for other computer vision tasks, such as object detection and segmentation.


DenseNet is a deeper and more complex CNN architecture than the simple CNN above: within each dense block, every layer receives the feature maps of all preceding layers, which helps it capture intricate patterns in images more effectively. It can be used in TensorFlow by importing the tf.keras.applications.densenet module and calling one of its model constructors, such as DenseNet201.

import tensorflow as tf
from tensorflow.keras import layers
from tensorflow.keras.applications.densenet import DenseNet201

# DenseNet201 backbone with ImageNet weights (the default), without its original classifier
densenet_model = DenseNet201(include_top=False, input_shape=(224, 224, 3))

# Freeze the pretrained layers so that only the new classification head is trained
for layer in densenet_model.layers:
    layer.trainable = False

Flattened_layer = layers.Flatten()(densenet_model.output)
# We have 10 flower classes; change this number to match your dataset.
output_layer = layers.Dense(10, activation='softmax')(Flattened_layer)

final_model = tf.keras.models.Model(inputs=densenet_model.input, outputs=output_layer)

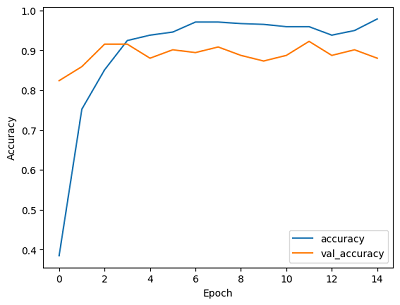

Here are the accuracy results of the trained DenseNet model.
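The DenseNet snippet above feeds raw pixels straight into DenseNet201. Keras' ImageNet-pretrained DenseNet models are meant to receive inputs prepared with `tf.keras.applications.densenet.preprocess_input`, which scales pixels to [0, 1] and then normalizes each channel with the ImageNet mean and standard deviation. Because all three models in the ensemble share a single input later, one option is to put that preprocessing inside the model. The sketch below does this with `Rescaling` and `Normalization` layers (our assumption of an equivalent formulation that saves and reloads cleanly) and swaps `Flatten` for `GlobalAveragePooling2D`, a common alternative that shrinks the head from 7 x 7 x 1920 x 10 weights to 1920 x 10. The helper name `build_densenet_classifier` is hypothetical.

```python
import tensorflow as tf
from tensorflow.keras import layers
from tensorflow.keras.applications.densenet import DenseNet201

# ImageNet channel statistics used by DenseNet's preprocessing
IMAGENET_MEAN = [0.485, 0.456, 0.406]
IMAGENET_STD = [0.229, 0.224, 0.225]


def build_densenet_classifier(num_classes=10, weights="imagenet"):
    """Hypothetical helper: frozen DenseNet201 backbone with in-model preprocessing."""
    inputs = tf.keras.Input(shape=(224, 224, 3))  # raw pixels in [0, 255]
    x = layers.Rescaling(1.0 / 255)(inputs)       # scale to [0, 1]
    x = layers.Normalization(                     # (x - mean) / std, per channel
        mean=IMAGENET_MEAN, variance=[s ** 2 for s in IMAGENET_STD]
    )(x)

    backbone = DenseNet201(include_top=False, weights=weights, input_shape=(224, 224, 3))
    backbone.trainable = False                    # freeze all pretrained layers
    x = backbone(x, training=False)               # (batch, 7, 7, 1920)

    x = layers.GlobalAveragePooling2D()(x)        # (batch, 1920)
    outputs = layers.Dense(num_classes, activation="softmax")(x)
    return tf.keras.Model(inputs, outputs, name="densenet_model")
```

For real training keep `weights="imagenet"`; `weights=None` builds the same architecture without downloading the pretrained weights, which is handy for a quick shape check.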

3. Squeeze-and-Excitation Block with Skip Connections

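The Squeeze-and-Excitation (SE) block is a technique for improving the performance of convolutional neural networks (CNNs) by recalibrating their feature maps (the outputs of the convolutional layers) channel by channel. It first squeezes each feature map into a single number with global average pooling, then passes these values through two small Dense layers (a ReLU bottleneck followed by a sigmoid) to produce one weight between 0 and 1 per channel, and finally multiplies each channel by its weight. The version below also adds a skip connection around the block. The SE block can be implemented in TensorFlow and used in your model as follows: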

import tensorflow as tf

# Squeeze-and-Excitation block with a skip connection.
# The skip connection requires x to already have `filters` channels.
def se_block(x, filters, reduction_ratio=16):
    x_skip = x

    x = tf.keras.layers.Conv2D(filters, (3, 3), padding='same')(x)
    x = tf.keras.layers.BatchNormalization(axis=3)(x)
    x = tf.keras.layers.Activation('relu')(x)
    x = tf.keras.layers.Conv2D(filters, (3, 3), padding='same')(x)
    x = tf.keras.layers.BatchNormalization(axis=3)(x)

    # Squeeze: one average value per channel
    se = tf.keras.layers.GlobalAveragePooling2D()(x)
    # Excitation: ReLU bottleneck, then one sigmoid weight per channel
    se = tf.keras.layers.Dense(filters // reduction_ratio, activation="relu", kernel_initializer='he_normal')(se)
    se = tf.keras.layers.Dense(filters, activation="sigmoid", kernel_initializer='he_normal')(se)
    se = tf.keras.layers.Reshape((1, 1, filters))(se)
    # Scale: element-wise multiplication, broadcast over height and width
    x = tf.keras.layers.Multiply()([x, se])

    # Skip connection
    x = tf.keras.layers.Add()([x, x_skip])
    x = tf.keras.layers.Activation('relu')(x)
    return x


# Modified ResNet-like model with SE blocks and skip connections
def SqueezeAndExcitation_with_skipconnections(shape=(224, 224, 3), classes=10):
    x_input = tf.keras.layers.Input(shape)

    x = tf.keras.layers.Conv2D(64, kernel_size=3, strides=2, padding='same')(x_input)
    x = tf.keras.layers.BatchNormalization()(x)
    x = tf.keras.layers.Activation('relu')(x)
    x = tf.keras.layers.MaxPool2D(pool_size=3, strides=2, padding='same')(x)

    x = se_block(x, 64)  # Apply SE block

    x = tf.keras.layers.Conv2D(64, kernel_size=3, strides=2, padding='same')(x)
    x = tf.keras.layers.BatchNormalization()(x)
    x = tf.keras.layers.Activation('relu')(x)
    x = tf.keras.layers.MaxPool2D(pool_size=3, strides=2, padding='same')(x)

    x = tf.keras.layers.AveragePooling2D((2, 2), padding='same')(x)
    x = tf.keras.layers.Flatten()(x)
    x = tf.keras.layers.Dense(512, activation='relu', kernel_initializer='he_normal')(x)
    x = tf.keras.layers.Dense(classes, activation='softmax')(x)

    model = tf.keras.models.Model(inputs=x_input, outputs=x, name="SqueezeAndExcitation")
    return model


# Instantiate the model
se_with_skip_connection_model = SqueezeAndExcitation_with_skipconnections()

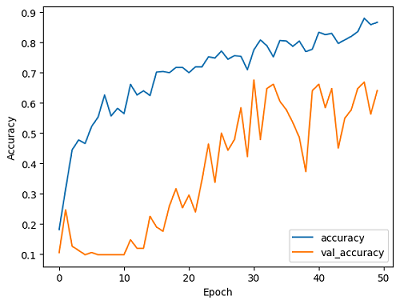

Here are the accuracy results of the SE model.
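To make the squeeze, excitation, and scale steps of `se_block` concrete, here is a NumPy sketch of the same arithmetic for a single feature map of shape (H, W, C). The weights are random stand-ins for the two Dense layers and biases are omitted for brevity, so the numbers themselves mean nothing; the point is the shapes, and the fact that every channel is multiplied by a single weight between 0 and 1.

```python
import numpy as np


def squeeze_excite(u, w1, w2):
    """NumPy sketch of one SE step for a single feature map u of shape (H, W, C).

    w1: (C, C // r) weights of the bottleneck layer (ReLU)
    w2: (C // r, C) weights of the expansion layer (sigmoid)
    Biases are omitted for brevity.
    """
    z = u.mean(axis=(0, 1))              # squeeze: global average pooling -> (C,)
    h = np.maximum(z @ w1, 0.0)          # excitation, step 1: ReLU bottleneck -> (C // r,)
    s = 1.0 / (1.0 + np.exp(-(h @ w2)))  # excitation, step 2: sigmoid gate -> (C,)
    return u * s, s                      # scale: s broadcasts over H and W


rng = np.random.default_rng(0)
H, W, C, r = 4, 4, 64, 16  # C and r match se_block(x, 64) with reduction_ratio=16
u = rng.standard_normal((H, W, C))
w1 = rng.standard_normal((C, C // r))
w2 = rng.standard_normal((C // r, C))

scaled, s = squeeze_excite(u, w1, w2)
print(scaled.shape, s.shape)  # (4, 4, 64) (64,)
```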

Deep Learning Ensemble Model

Ensemble models can be used for a variety of tasks, such as image classification, object detection, and natural language processing. They are a powerful tool for improving the performance of machine learning models.

Ensembles often perform better than their individual members because averaging reduces the variance of the predictions. In the ensemble built here, the final prediction is the average of the class probabilities predicted by the individual models, so if one model makes a mistake on an image, the other models can outvote it, provided their errors are not strongly correlated.
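The variance argument can be made concrete with a standard result for averaged predictors. Suppose each of the M models produces a prediction f_m(x) with variance σ², and every pair of models has correlation ρ. The variance of their average is then:

```latex
\operatorname{Var}\left(\frac{1}{M}\sum_{m=1}^{M} f_m(x)\right)
  = \rho\,\sigma^2 + \frac{1-\rho}{M}\,\sigma^2
```

With M = 3 uncorrelated models (ρ = 0) the variance falls to σ²/3, whereas perfectly correlated models (ρ = 1) gain nothing. This is one reason to combine architectures as different as a simple CNN, DenseNet, and an SE network rather than three copies of the same network: their errors are likely to be less correlated.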

Once we have trained the three models, we can combine them into an ensemble to improve the overall performance. To do this, replace the placeholder paths in the following code snippet with the paths to the models saved in the previous steps:

import tensorflow as tf

# Load the three trained models and wrap each one so it has a unique name inside the ensemble
simple_model = tf.keras.models.load_model('path to simple CNN model trained weights')
simple_model = tf.keras.models.Model(inputs=simple_model.input, outputs=simple_model.output, name='simple_model')

densenet_model = tf.keras.models.load_model('path to densenet model trained weights')
densenet_model = tf.keras.models.Model(inputs=densenet_model.input, outputs=densenet_model.output, name='densenet_model')

se_with_skip_connection_model = tf.keras.models.load_model('path to squeeze and excitation model trained weights')
se_with_skip_connection_model = tf.keras.models.Model(inputs=se_with_skip_connection_model.input, outputs=se_with_skip_connection_model.output, name='se_with_skip_connection_model')

models = [simple_model, densenet_model, se_with_skip_connection_model]

# All three models receive the same 224x224 RGB input
model_input = tf.keras.layers.Input(shape=(224, 224, 3))
model_outputs = [model(model_input) for model in models]

# Average the per-class softmax probabilities of the three models
ensemble_output = tf.keras.layers.Average()(model_outputs)
ensemble_model = tf.keras.models.Model(inputs=model_input, outputs=ensemble_output, name='ensemble')

# Compiling the ensemble model
ensemble_model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

# Stop once val_loss has not improved for 5 epochs and keep the best weights
early_stopping = tf.keras.callbacks.EarlyStopping(monitor='val_loss', mode='min', patience=5, restore_best_weights=True, verbose=1)
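A quick way to check what the `Average` layer does is to build the same kind of ensemble from two tiny stand-in models and compare its output with a manual mean. The toy models below are hypothetical (random weights, 8x8 inputs, 3 classes) and take the place of the three trained networks. The sketch also shows that setting `trainable = False` on the members turns the ensemble into a pure averaging step with no trainable weights, an option if you only want to combine the already-trained models rather than fine-tune them.

```python
import numpy as np
import tensorflow as tf


def tiny_model(name, num_classes=3):
    """Hypothetical stand-in for one trained ensemble member."""
    inputs = tf.keras.Input(shape=(8, 8, 3))
    x = tf.keras.layers.GlobalAveragePooling2D()(inputs)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)
    return tf.keras.Model(inputs, outputs, name=name)


members = [tiny_model("member_a"), tiny_model("member_b")]
for m in members:
    m.trainable = False  # freeze members: the ensemble only averages

ensemble_input = tf.keras.Input(shape=(8, 8, 3))
ensemble_output = tf.keras.layers.Average()([m(ensemble_input) for m in members])
toy_ensemble = tf.keras.Model(ensemble_input, ensemble_output, name="toy_ensemble")

x = np.random.default_rng(0).random((4, 8, 8, 3)).astype("float32")
manual_mean = np.mean([m.predict(x, verbose=0) for m in members], axis=0)
ensemble_pred = toy_ensemble.predict(x, verbose=0)

print(np.allclose(manual_mean, ensemble_pred, atol=1e-6))  # True
print(len(toy_ensemble.trainable_weights))                 # 0
```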

Training and Evaluation

Train each model on the training data and evaluate its performance on the validation set. Then combine their predictions with the ensemble model and evaluate the ensemble's accuracy.
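Training each base model before it is loaded into the ensemble might look like the following minimal sketch. It assumes the training and validation data from the data preparation step (passed in wherever the article uses `train_generator` and `validation_generator`) with one-hot labels. The function name `train_and_save` and the `.keras` file names are hypothetical.

```python
import tensorflow as tf


def train_and_save(model, train_data, val_data, save_path, epochs=50):
    """Hypothetical helper: compile, train, evaluate, and save one base model.

    Assumes one-hot labels (hence categorical_crossentropy) and a save_path
    ending in ".keras" so the model can be reloaded with load_model.
    """
    model.compile(optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"])
    early_stopping = tf.keras.callbacks.EarlyStopping(
        monitor="val_loss", patience=5, restore_best_weights=True
    )
    history = model.fit(
        train_data, validation_data=val_data, epochs=epochs, callbacks=[early_stopping]
    )
    _, val_accuracy = model.evaluate(val_data)  # returns [loss, accuracy]
    model.save(save_path)
    return history, val_accuracy


# Example usage with the three models from this post:
# train_and_save(simple_cnn_model, train_generator, validation_generator, "simple_cnn.keras")
# train_and_save(final_model, train_generator, validation_generator, "densenet.keras")
# train_and_save(se_with_skip_connection_model, train_generator, validation_generator, "se_model.keras")
```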

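# Optionally fine-tune the ensemble: fit() updates every trainable layer of the
# three sub-models jointly through the averaged output.
history = ensemble_model.fit(
    train_generator,
    epochs=50,
    validation_data=validation_generator,
    callbacks=[early_stopping],
)

# evaluate() returns the loss followed by the compiled metrics (here, accuracy)
ensemble_loss, ensemble_accuracy = ensemble_model.evaluate(validation_generator)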

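The ensemble's accuracy is not simply the average of the individual models' accuracies. The ensemble averages the predicted class probabilities for each image and then picks the class with the highest averaged probability, so when the models make mistakes on different images, the ensemble can be more accurate than any single member; when they make the same mistakes, it gains little.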
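A small made-up example shows why the two numbers differ. The probabilities below are invented for illustration: three models each classify two of three images correctly, but because their mistakes fall on different images, the averaged probabilities get all three right.

```python
import numpy as np

y_true = np.array([0, 1, 2])  # true class of each of three images

# Invented softmax outputs, shape (num_images, num_classes), one array per model
probs = {
    "model_a": np.array([[0.4, 0.5, 0.1], [0.1, 0.8, 0.1], [0.1, 0.1, 0.8]]),
    "model_b": np.array([[0.8, 0.1, 0.1], [0.5, 0.4, 0.1], [0.1, 0.2, 0.7]]),
    "model_c": np.array([[0.7, 0.2, 0.1], [0.2, 0.7, 0.1], [0.3, 0.4, 0.3]]),
}


def accuracy(p):
    """Fraction of images whose highest-probability class is the true class."""
    return float(np.mean(p.argmax(axis=1) == y_true))


member_acc = {name: accuracy(p) for name, p in probs.items()}
ensemble_probs = np.mean(list(probs.values()), axis=0)  # what the Average layer computes

print(member_acc)                          # each model: 2 of 3 correct
print(np.mean(list(member_acc.values())))  # average of member accuracies, about 0.667
print(accuracy(ensemble_probs))            # 1.0
```

With other numbers the ensemble can also land below its best member, for example when two models make the same confident mistake, so measuring it on the validation set remains essential.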

Final Result of Ensemble Model

Conclusion

In this blog, we've explored how to perform flower image classification using an ensemble of deep-learning models. We implemented three different models: a Simple CNN, DenseNet, and a model with a Squeeze-and-Excitation Block with skip connections. By combining their predictions using an ensemble approach, we can often achieve better classification accuracy.

This technique can be applied to various image classification tasks to improve the robustness and reliability of a model's predictions. You can further fine-tune the models, adjust hyperparameters, and evaluate performance on a held-out test set to make sure the ensemble meets your classification needs.

We at CodeTrade, a leading AI & ML development company, are committed to developing and deploying cutting-edge ensemble models for flower image classification. We believe that these models have the potential to revolutionize the way we interact with the natural world.

If you are interested in learning more about ensemble models for flower image classification, or if you need help developing and deploying your models, contact us today. We would be happy to discuss your specific needs and help you achieve your goals.